Representative papers are highlighted.

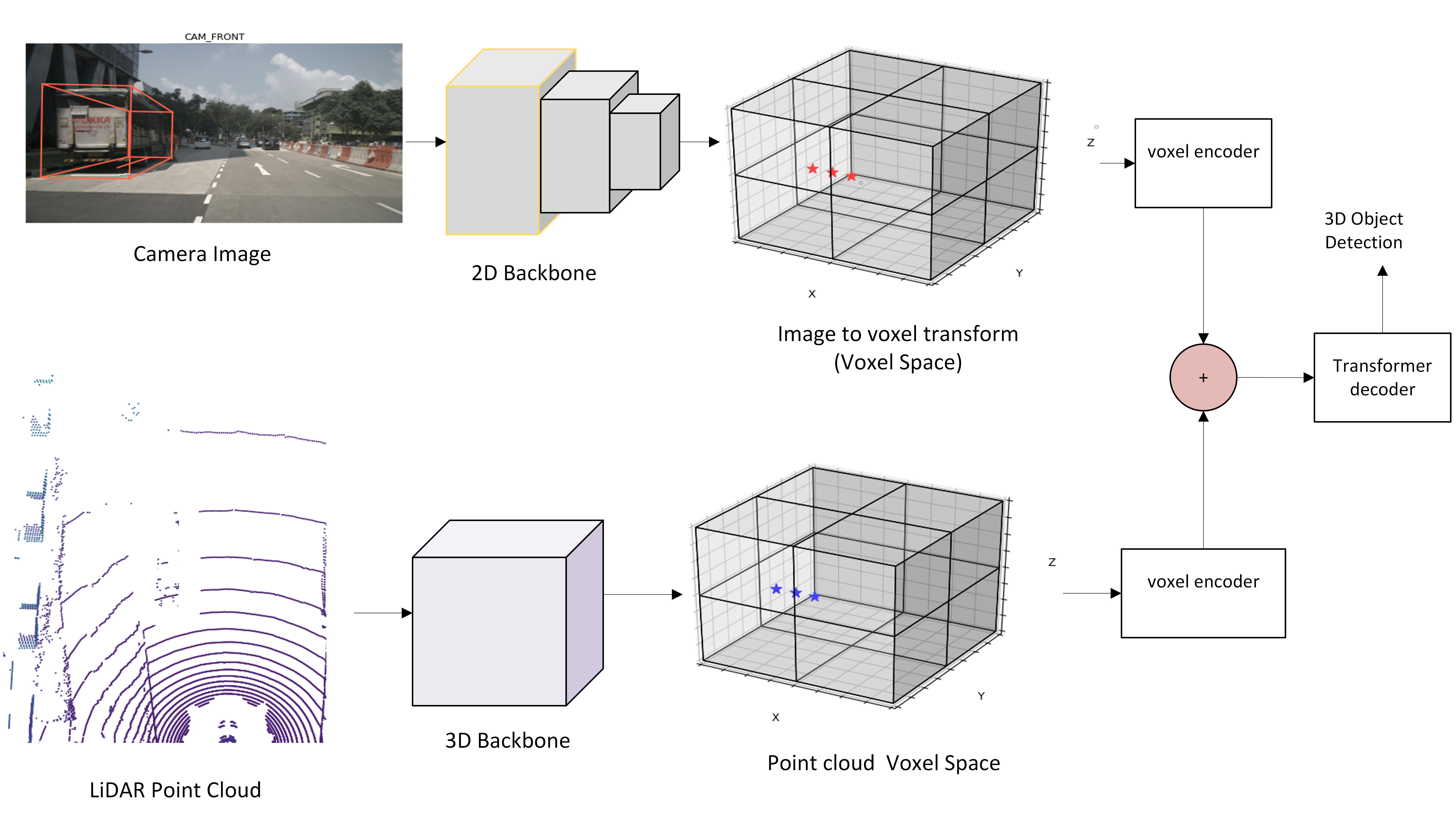

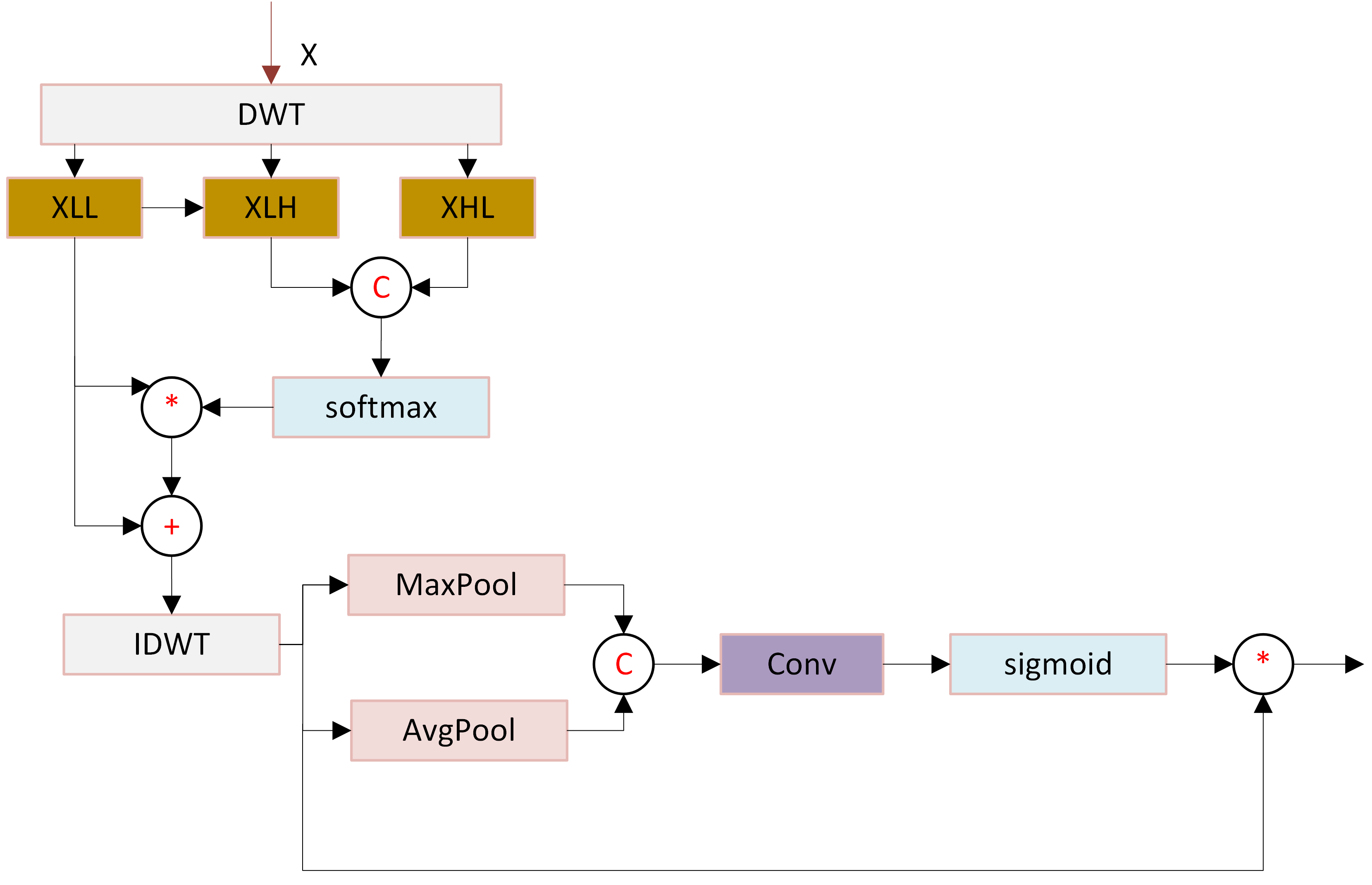

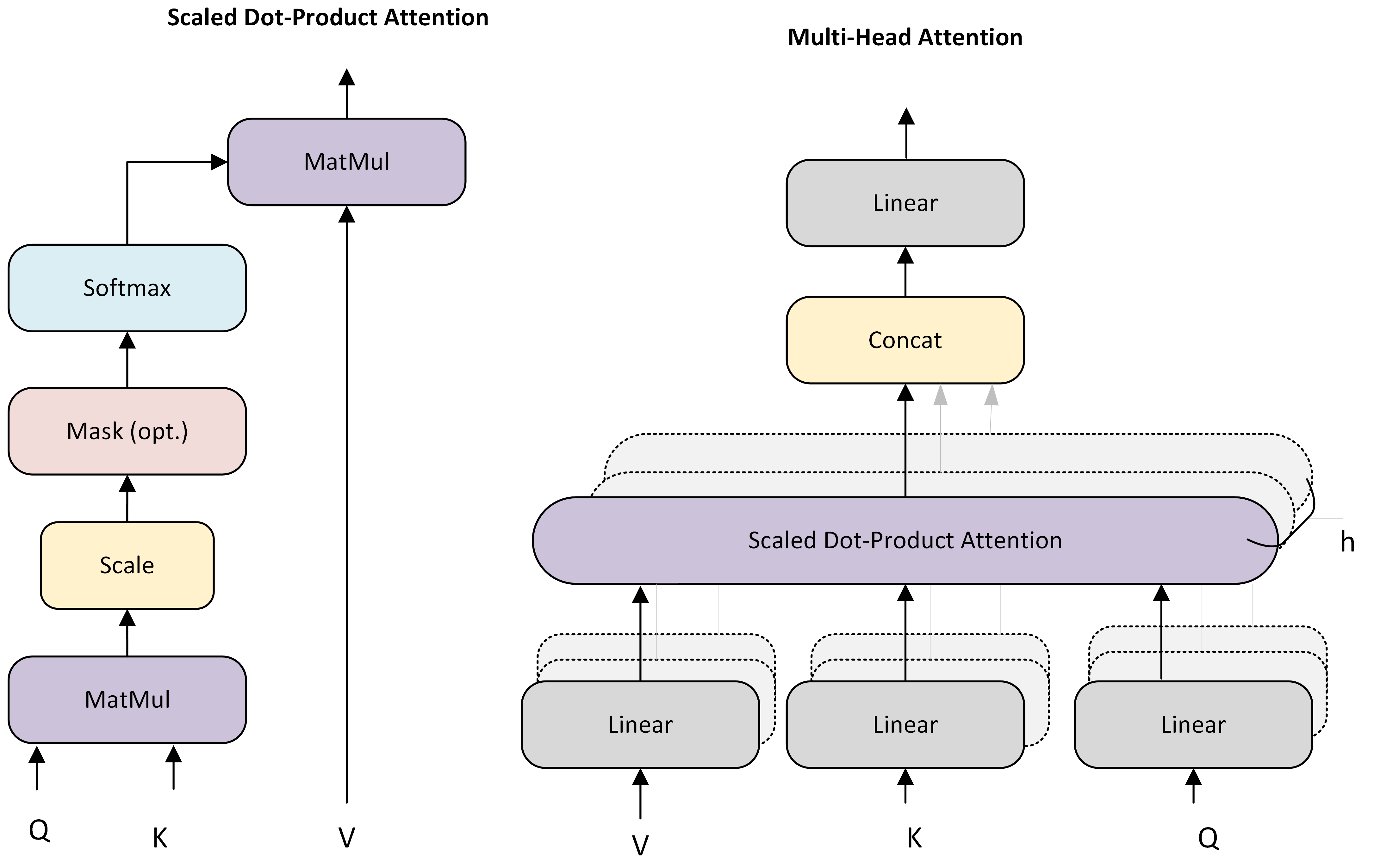

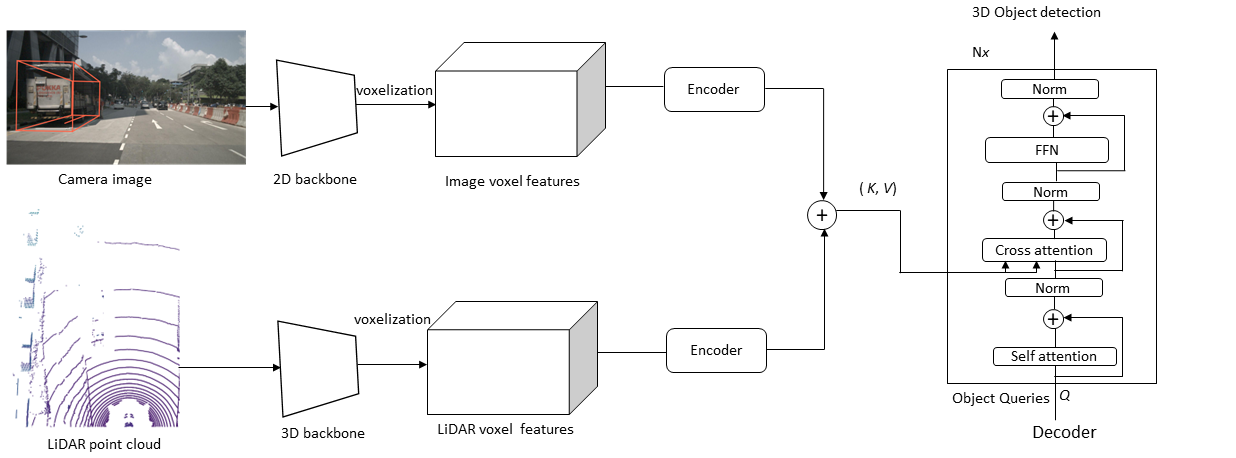

Transformer-Based Optimized Multimodal Fusion for 3D Object Detection in Autonomous Driving

Simegnew Alaba and John E. Ball. IEEE Access, April 5, 2024.

3D object detection is crucial for autonomous driving, providing a detailed perception of the environment through multiple sensors. Cameras excel at capturing color and texture but struggle with depth estimation and degrade under adverse weather or lighting conditions. LiDAR sensors offer accurate depth information but lack visual detail. To overcome these limitations, this work introduces a multimodal fusion model that combines data from LiDAR and cameras for improved 3D object detection. The model transforms camera images and LiDAR point cloud data into a voxel-based representation and uses encoder networks to enhance spatial interactions. A multiresolution attention module and discrete wavelet transforms are incorporated to improve feature extraction, merging LiDAR's depth with the camera's texture and color details. The model also uses a transformer decoder network with self-attention and cross-attention for robust object detection through global interactions. Optimization techniques such as pruning and Quantization-Aware Training (QAT) reduce memory and computational demands while maintaining performance. Testing on the nuScenes dataset demonstrates the model's effectiveness, providing competitive results and improved operational efficiency in multimodal fusion 3D object detection.

WCAM: Wavelet Convolutional Attention Module

Simegnew Alaba and John E. Ball. IEEE SoutheastCon, April 24, 2024.

Convolutional neural networks (CNNs) excel at extracting complex features from images, but conventional CNNs process entire images uniformly, which can be inefficient since not all regions are equally important. To address this, the Wavelet Convolutional Attention Module (WCAM) focuses CNNs on crucial features, using wavelets' multiresolution and sparsity properties to guide efficient downsampling without losing details.

Emerging Trends in Autonomous Vehicle Perception: Multimodal Fusion for 3D Object Detection

Simegnew Alaba, Ali C. Gurbuz, and John E. Ball. World Electric Vehicle Journal, January 7, 2024.

This work provides an exhaustive review of multimodal fusion-based 3D object detection methods, focusing on CNN and Transformer-based models. It underscores the necessity of equipping fully autonomous vehicles with diverse sensors to ensure robust and reliable operation. The survey explores the advantages and drawbacks of camera, LiDAR, and radar sensors. Additionally, it summarizes autonomy datasets and examines the latest advancements in multimodal fusion-based methods. The work concludes by highlighting the ongoing challenges, open issues, and potential directions for future research.

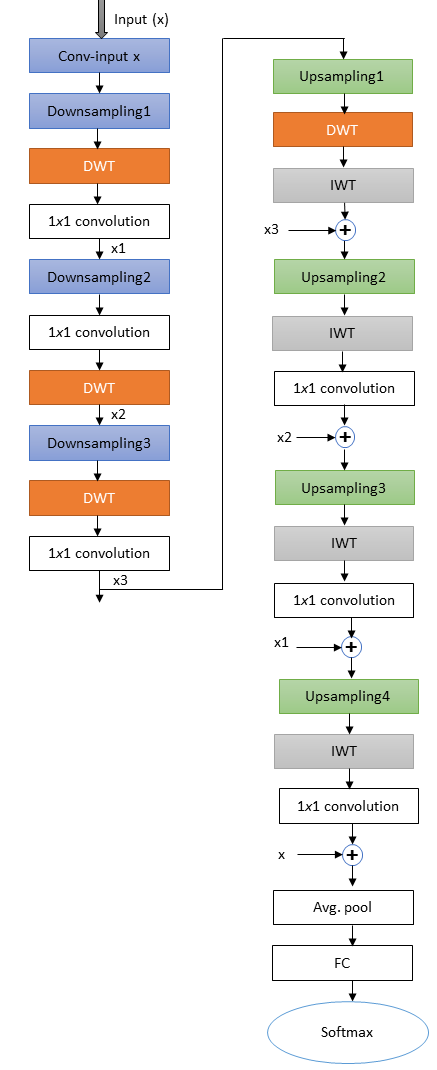

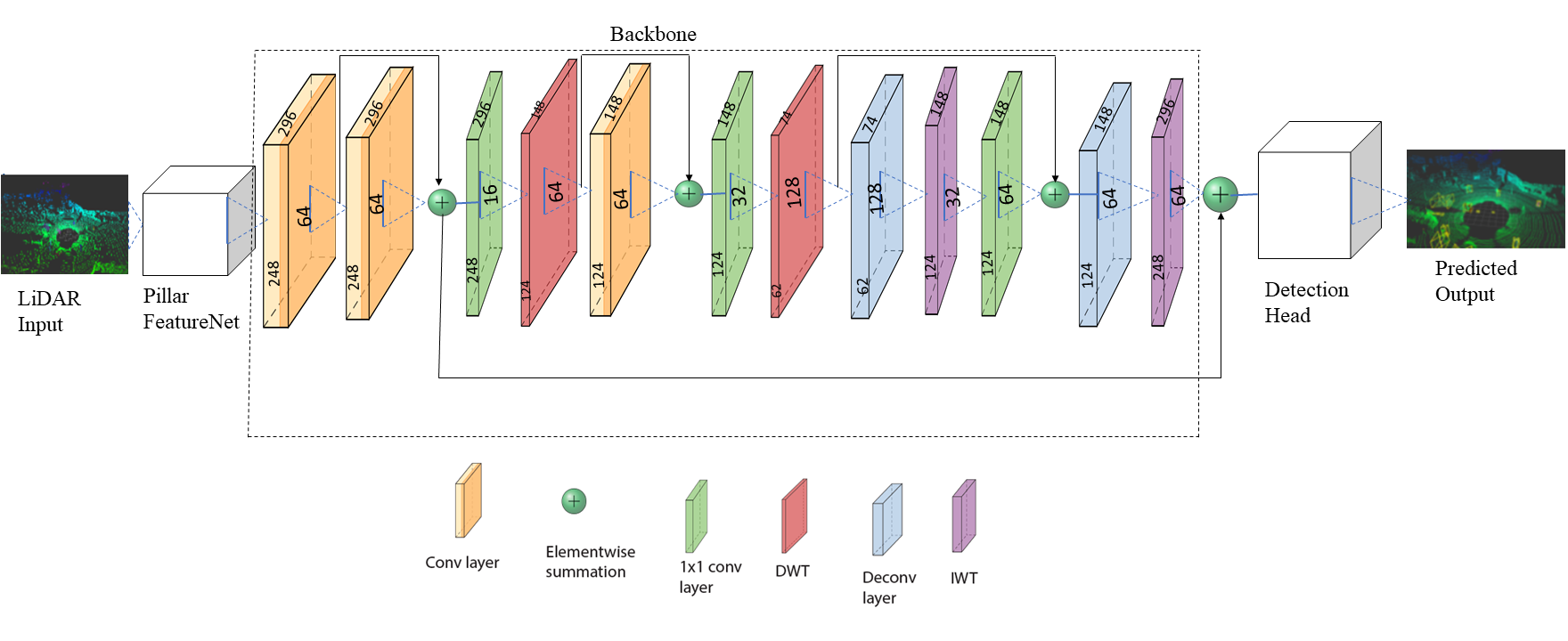

SmRNet: Scalable Multiresolution Feature Extraction Network

Simegnew Alaba and John E. Ball. IEEE ICECET, Cape Town, South Africa, November 16–17, 2023.

This work addresses the challenge of creating a lightweight CNN with high accuracy. It replaces pooling and stride operations with the discrete wavelet transform (DWT) and inverse wavelet transform (IWT) as downsampling and upsampling operators. Because the wavelet transform is lossless, this preserves details that would otherwise be lost during downsampling.
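The lossless downsampling idea can be illustrated with a minimal sketch. It assumes a single-level 2D Haar wavelet applied to a small grayscale patch; the wavelet family and the network layers used in the paper are not given here, and the names `haar_dwt2` and `haar_idwt2` are hypothetical helpers chosen for illustration.

```python
def haar_dwt2(x):
    """One level of a 2D Haar DWT on a 2D list with even height and width.

    Returns four half-resolution subbands: the approximation (ll) and
    three detail bands (column, row, and diagonal differences).
    """
    ll, d_col, d_row, d_diag = [], [], [], []
    for i in range(0, len(x), 2):
        r_ll, r_col, r_row, r_diag = [], [], [], []
        for j in range(0, len(x[0]), 2):
            a, b = x[i][j], x[i][j + 1]          # top-left, top-right
            c, d = x[i + 1][j], x[i + 1][j + 1]  # bottom-left, bottom-right
            r_ll.append((a + b + c + d) / 2)     # local average (scaled)
            r_col.append((a - b + c - d) / 2)    # difference across columns
            r_row.append((a + b - c - d) / 2)    # difference across rows
            r_diag.append((a - b - c + d) / 2)   # diagonal difference
        ll.append(r_ll)
        d_col.append(r_col)
        d_row.append(r_row)
        d_diag.append(r_diag)
    return ll, d_col, d_row, d_diag


def haar_idwt2(ll, d_col, d_row, d_diag):
    """Inverse of haar_dwt2: rebuilds the full-resolution 2D list."""
    out = []
    for i in range(len(ll)):
        top, bottom = [], []
        for j in range(len(ll[0])):
            s, u, v, w = ll[i][j], d_col[i][j], d_row[i][j], d_diag[i][j]
            top += [(s + u + v + w) / 2, (s - u + v - w) / 2]
            bottom += [(s + u - v - w) / 2, (s - u - v + w) / 2]
        out.append(top)
        out.append(bottom)
    return out


# A 4x4 patch is reduced to four 2x2 subbands and then recovered exactly.
img = [[1, 2, 3, 4],
       [5, 6, 7, 8],
       [9, 10, 11, 12],
       [13, 14, 15, 16]]
ll, d_col, d_row, d_diag = haar_dwt2(img)
restored = haar_idwt2(ll, d_col, d_row, d_diag)
```

Unlike 2x2 max pooling, which keeps one value per block and discards the other three, the four subbands together hold as many values as the input, so the inverse transform can rebuild the original patch.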

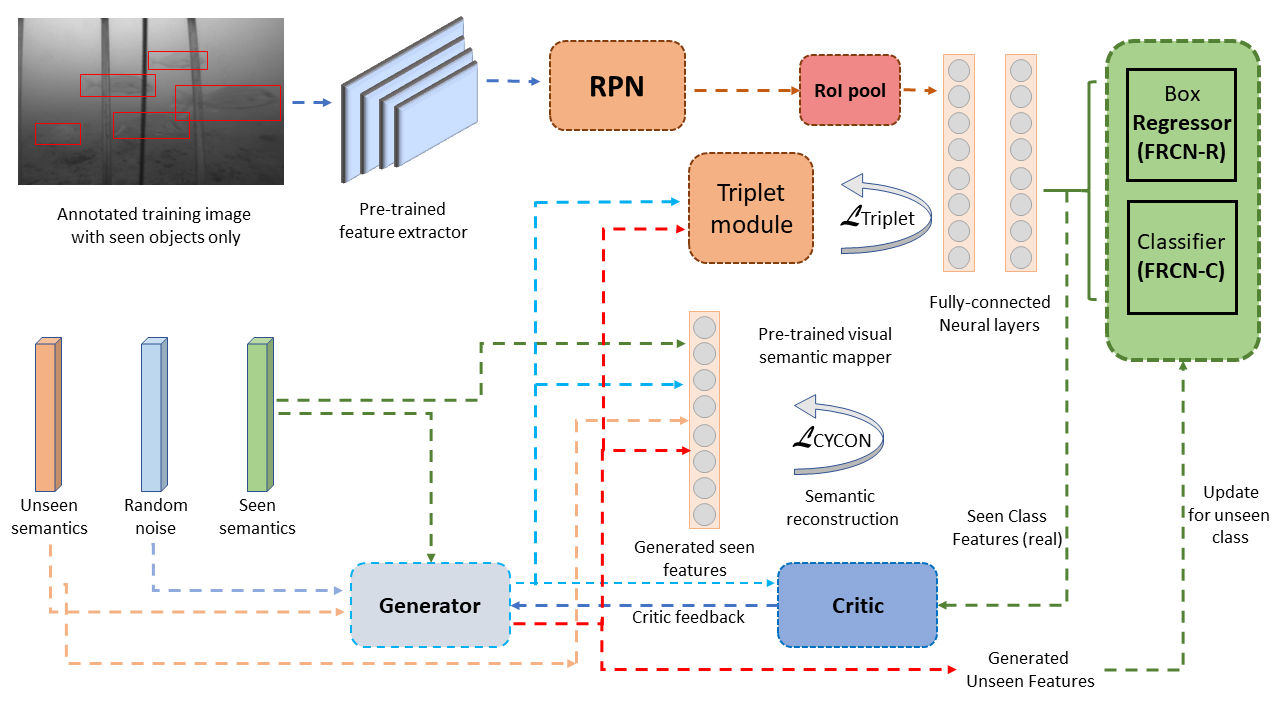

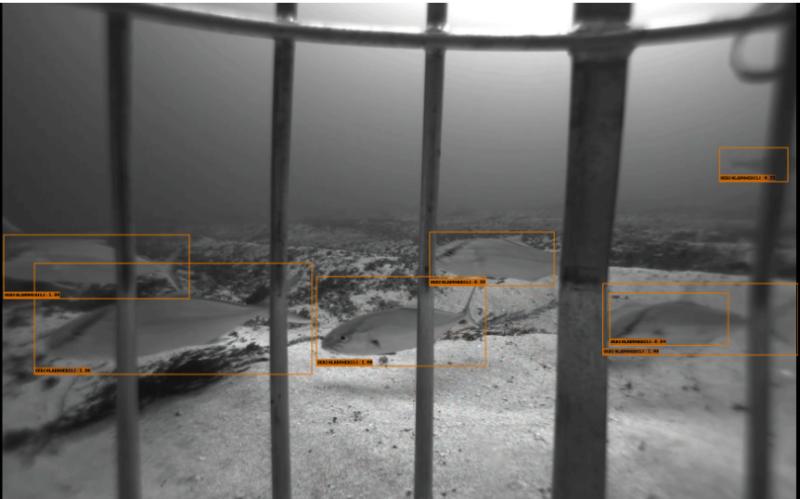

A Zero Shot Detection Based Approach for Fish Species Recognition in Underwater Environments

Chiranjibi Shah, M. M. Nabi, Simegnew Yihunie Alaba, Jack Prior, Ryan Caillouet, Matthew D. Campbell, Farron Wallace, John E. Ball, Robert Moorhead. IEEE Ocean Conference & Exposition, Biloxi, MS, USA, September 25–28, 2023.

This work applies Zero-Shot Detection for Fish Species Recognition (ZSD-FR) in underwater environments. The approach can identify and locate objects even for classes not seen during training. Generative models, such as GANs, generate samples for unseen classes by leveraging semantics learned from seen classes.

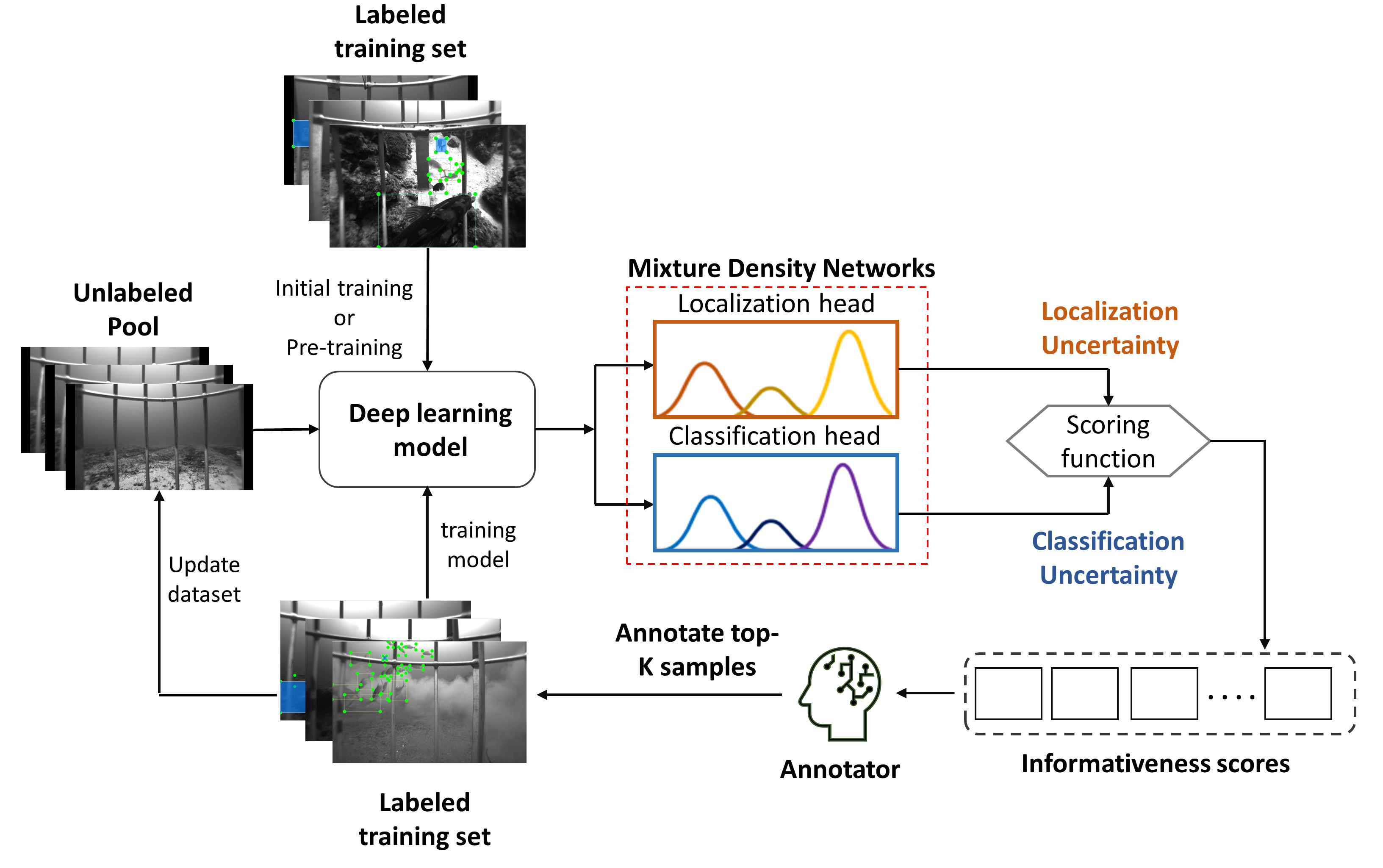

Probabilistic Model-based Active Learning with Attention Mechanism for Fish Species Recognition

M. M. Nabi, Chiranjibi Shah, Simegnew Yihunie Alaba, Jack Prior, Matthew D. Campbell, Farron Wallace, Robert Moorhead, John E. Ball. IEEE Ocean Conference & Exposition, Biloxi, MS, USA, September 25–28, 2023.

This work introduces a deep-learning fish detection and classification model with the Convolutional Block Attention Module (CBAM) for enhanced detection. It employs a cost-efficient Deep Active Learning approach to select informative samples from unlabeled data. Probabilistic modeling, using mixture density networks, estimates probability distributions for localization and classification.

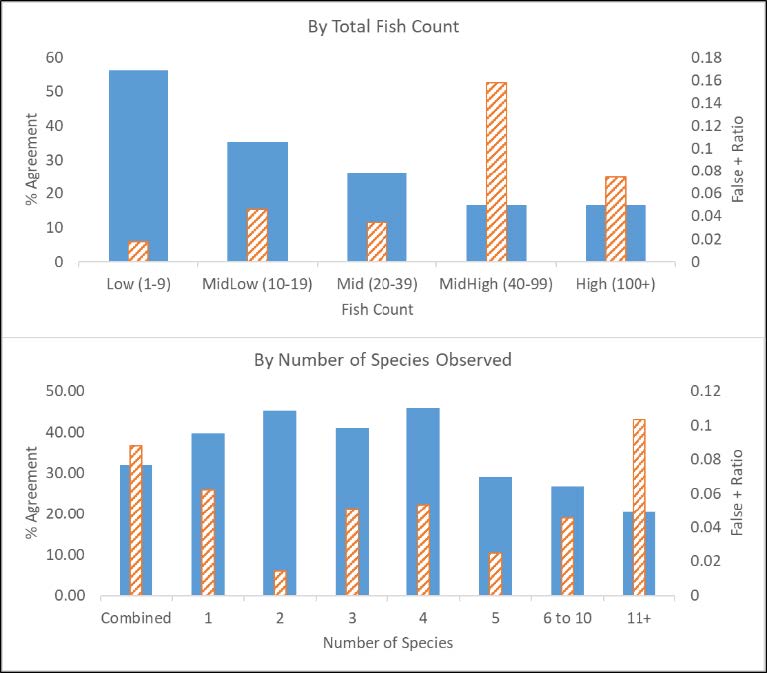

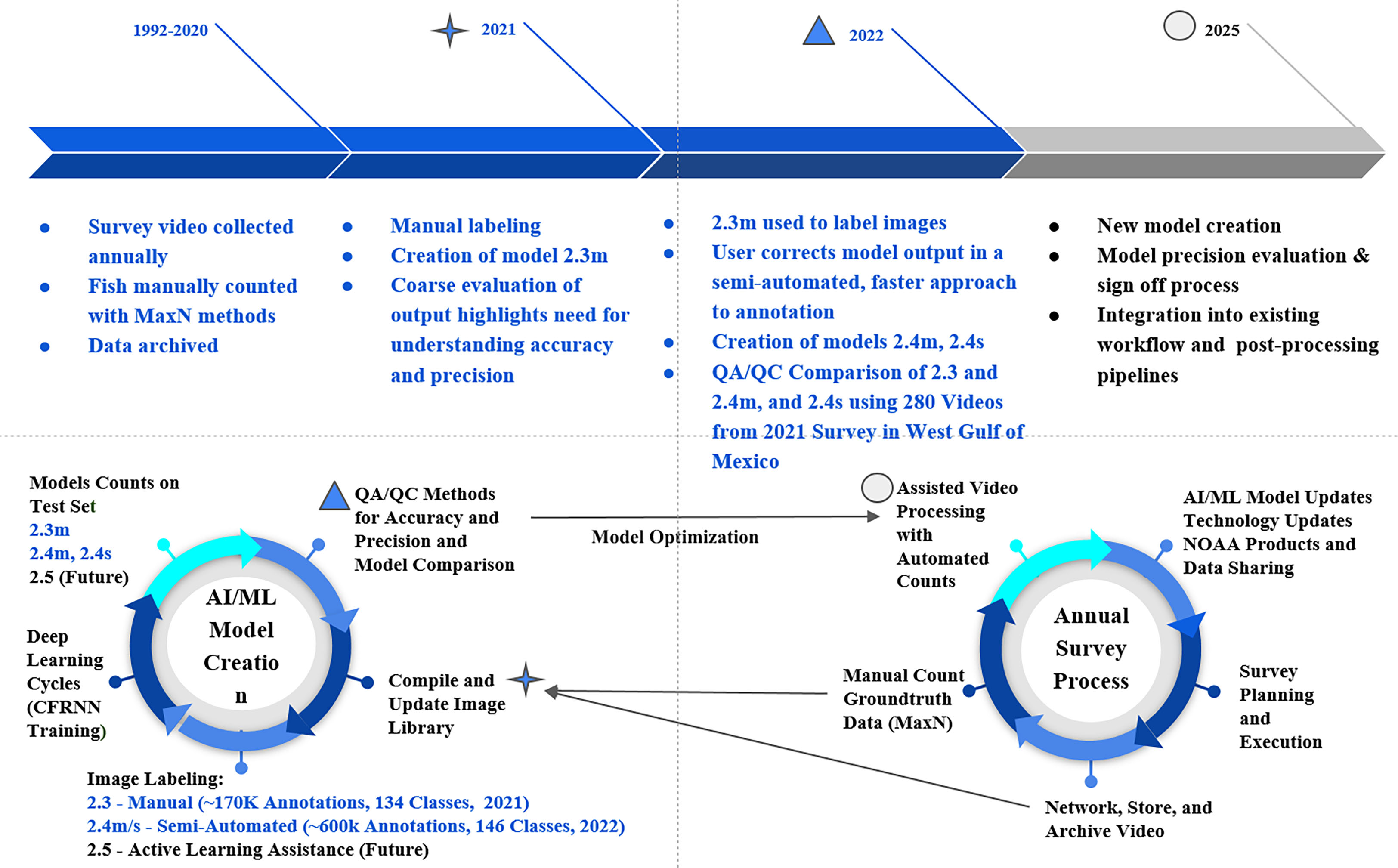

Optimizing and Gauging Model Performance with Metrics to Integrate with Existing Video Surveys

Jack Prior, Simegnew Yihunie Alaba, Farron Wallace, Matthew D. Campbell, Chiranjibi Shah, M. M. Nabi, Paul F. Mickle, Robert Moorhead, John E. Ball. IEEE Ocean Conference & Exposition, Biloxi, MS, USA, September 25–28, 2023.

Automated deep-learning models offer efficient fish population monitoring in baited underwater video sampling. Precise results are essential, and an otolith age-reader comparison helps evaluate model performance. Increasing the number of annotations improves performance for most species, but challenges remain with occlusion, turbidity, schooling species, and cryptic appearances. A multiple-year evaluation of Red Snapper highlights location, time, and environmental considerations. Adapting active learning algorithms can enhance model efficiency. These quality assessment methods aid in integrating automated methods with manual video counts.

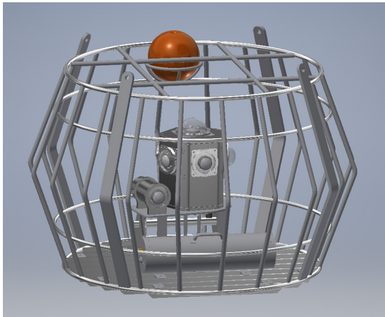

Multi-sensor fusion 3D object detection for autonomous driving

Simegnew Yihunie Alaba, John E. Ball. Autonomous Systems: Sensors, Processing and Security for Ground, Air, Sea, and Space Vehicles and Infrastructure, Orlando, FL, USA, April 30 – May 4, 2023.

A multi-modal fusion 3D object detection model is proposed for autonomous driving, aiming to leverage the strengths of LiDAR and camera sensors.

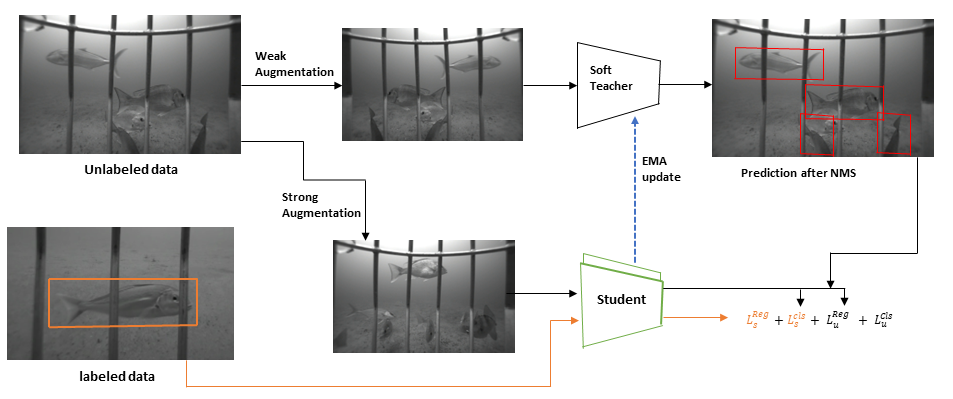

Semi-supervised learning for fish species recognition

Simegnew Yihunie Alaba, Chiranjibi Shah, M. M. Nabi, John E. Ball, Robert Moorhead, Deok Han, Jack Prior, Matthew D. Campbell, Farron Wallace. Ocean Sensing and Monitoring XV: Machine Learning/Deep Learning 1, Orlando, FL, USA, April 30 – May 4, 2023.

A semi-supervised deep-learning network is developed for fish species recognition, which is crucial for monitoring fish activities, distribution, and ecosystem management.

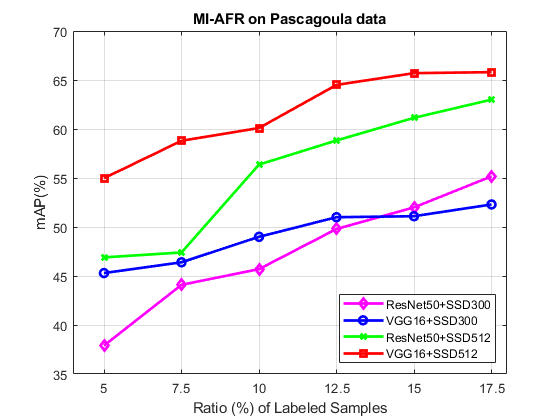

MI-AFR: multiple instance active learning-based approach for fish species recognition in underwater environments

Chiranjibi Shah, Simegnew Yihunie Alaba, M. M. Nabi, Ryan Caillouet, Jack Prior, Matthew Campbell, Farron Wallace, John E. Ball, Robert Moorhead. Ocean Sensing and Monitoring XV: Machine Learning/Deep Learning 1, Orlando, FL, USA, April 30 – May 4, 2023.

This work introduces MI-AFR, an object detection-based approach for fish species recognition, offering an efficient solution to automate video surveys and reduce processing time and cost.

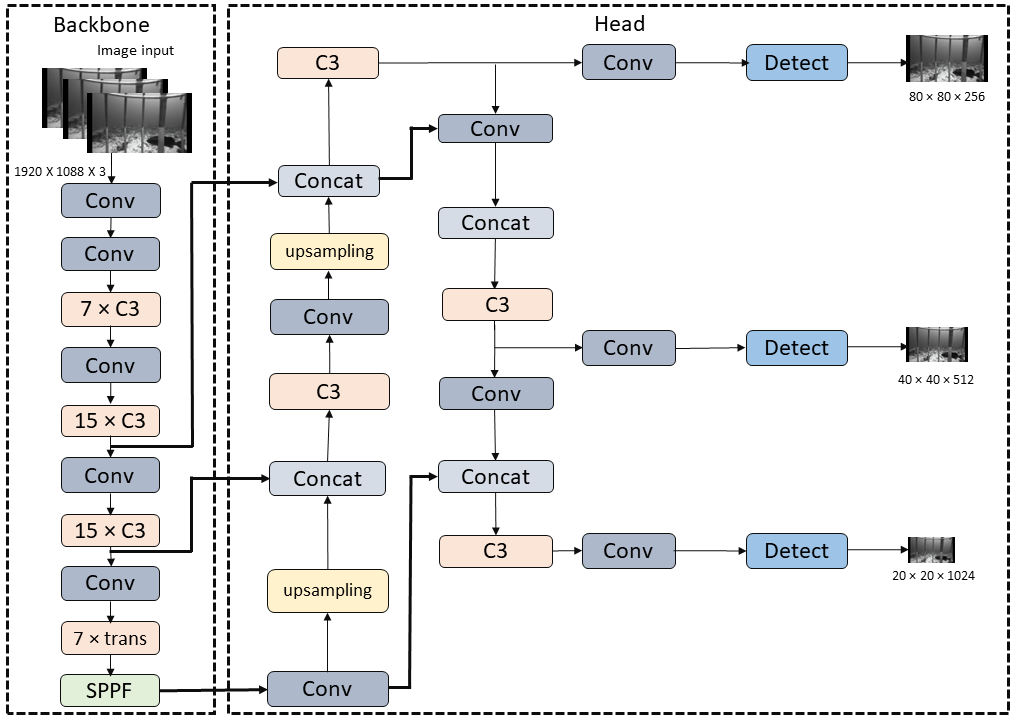

An enhanced YOLOv5 model for fish species recognition from underwater environments

Chiranjibi Shah, Simegnew Yihunie Alaba, M. M. Nabi, Jack Prior, Matthew Campbell, Farron Wallace, John E. Ball, Robert Moorhead. Ocean Sensing and Monitoring XV: Machine Learning/Deep Learning 1, Orlando, FL, USA, April 30 – May 4, 2023.

This work presents an enhanced YOLOv5 object detection model tailored for fish species recognition. It addresses challenges in underwater environments and includes modifications to improve performance, such as depth scaling and a transformer block in the backbone network.

Estimating precision and accuracy of automated video post-processing: A step towards implementation of AI/ML for optics-based fish sampling

Jack Prior, Matthew Campbell, Matthew Dawkins, Paul F. Mickle, Robert J. Moorhead, Simegnew Yihunie Alaba, Chiranjibi Shah, Joseph R. Salisbury, Kevin R. Rademacher, A. Paul Felts, Farron Wallace. Frontiers in Marine Science, 2023.

This study aims to automate fish habitat monitoring using computer vision algorithms and proposes a novel evaluation method. Models were developed for Gulf of Mexico fish species and habitats, and their performance was assessed using metrics such as station matching, false detections, and coefficient of variation.

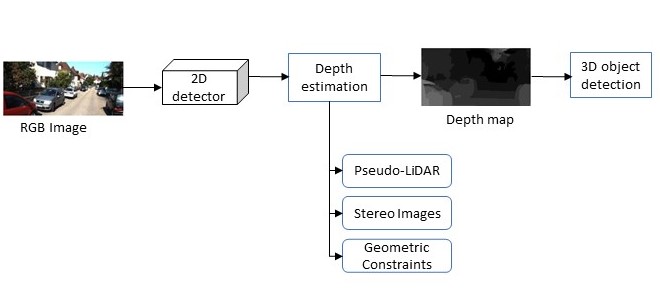

Deep Learning-Based Image 3-D Object Detection for Autonomous Driving: Review

Simegnew Yihunie Alaba, John E. Ball. IEEE Sensors, 2023.

This survey focuses on 3-D object detection for autonomous driving using camera sensors. It covers depth estimation techniques, evaluation metrics, and state-of-the-art models. The survey also discusses challenges and future directions in 3-D object detection.

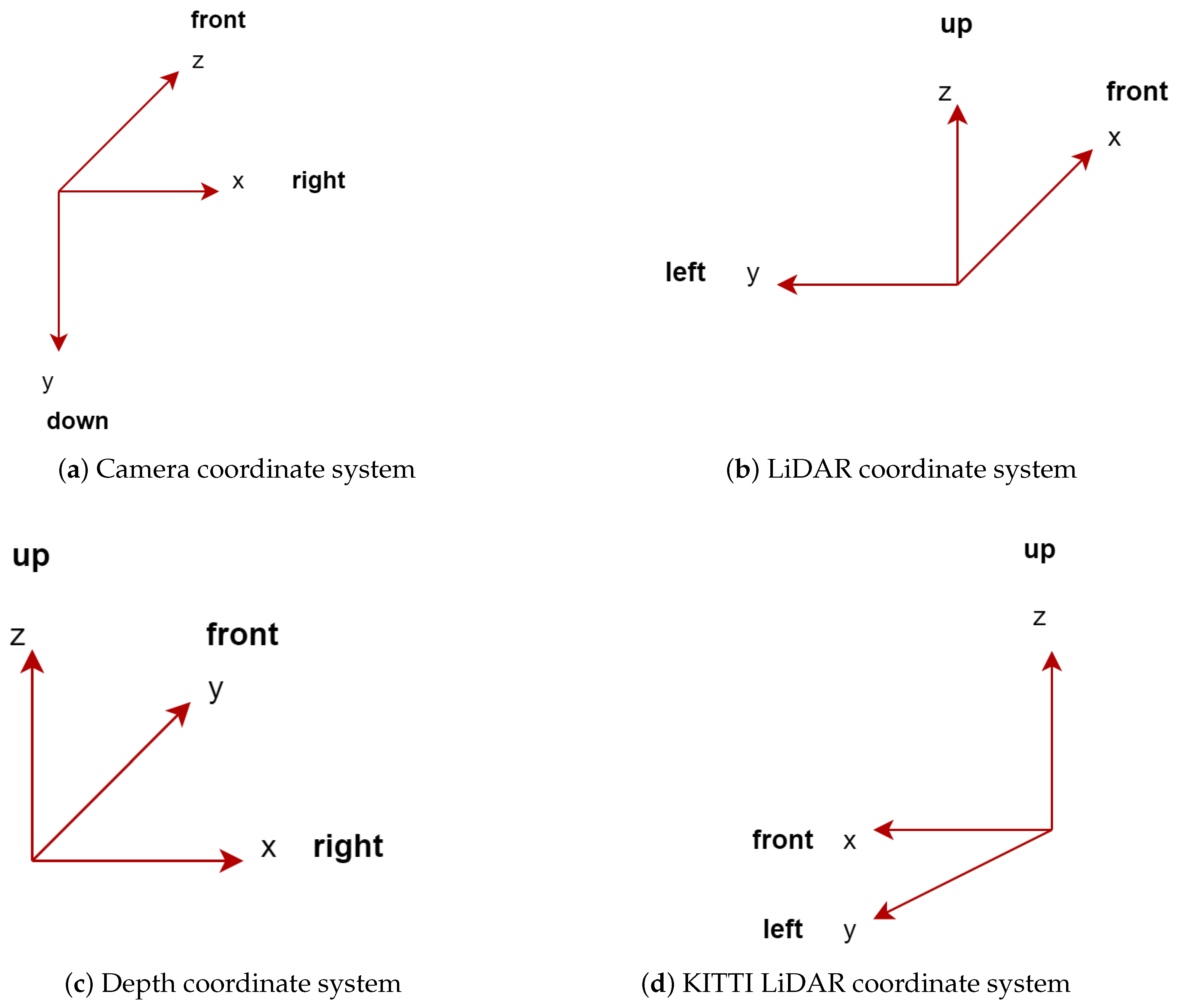

A Survey on Deep-Learning-Based LiDAR 3D Object Detection for Autonomous Driving

Simegnew Yihunie Alaba, John E. Ball. MDPI Sensing and Imaging, 2022.

This survey focuses on LiDAR-based 3D object detection for autonomous driving. It covers techniques for handling LiDAR data, 3D coordinate systems, and state-of-the-art methods. Challenges such as scale change, sparsity, and occlusions are also discussed.

Class-Aware Fish Species Recognition Using Deep Learning for an Imbalanced Dataset

Simegnew Yihunie Alaba, John E. Ball. MDPI Sensing and Imaging, 2022.

This work tackles fish species recognition using object detection to handle multiple fish in a single image. The model combines MobileNetv3-large and VGG16 backbones with an SSD detection head and proposes a class-aware loss function to address class imbalance.

WCNN3D: Wavelet Convolutional Neural Network-Based 3D Object Detection for Autonomous Driving

Simegnew Yihunie Alaba, John E. Ball. MDPI Sensing and Imaging, 2022.

This work introduces a 3D object detection network without pooling operations, using wavelet multiresolution analysis and frequency filters for enhanced feature extraction. The model incorporates discrete wavelet transforms (DWT) and inverse wavelet transforms (IWT) with skip connections to enrich feature representation and recover details lost during downsampling. Experimental results demonstrate improved performance compared to PointPillars, with fewer trainable parameters.

SEAMAPD21: a large-scale reef fish dataset for fine-grained categorization

Océane Boulais, Simegnew Yihunie Alaba, John E. Ball, Matthew Campbell, Ahmed Tashfin Iftekhar, Robert Moorhead, James Primrose, Jack Prior, Farron Wallace, Henry Yu, Aotian Zheng. IEEE CVPR FGVC8: The Eighth Workshop on Fine-Grained Visual Categorization, 2021.

We introduce SEAMAPD21, a large-scale reef fish dataset from the Gulf of Mexico, vital for fishery monitoring. This dataset enables efficient automated analysis of fish species, overcoming previous challenges. SEAMAPD21 includes 90,000 annotations for 130 fish species from 2018 and 2019 surveys. We present baseline experiments and results, available at https://github.com/SEFSC/SEAMAPD21.

Accomplishments

VOLUNTEER WORK

Science Fair Judge for high school students, Starkville, MS. February 22, 2022, and March 7, 2023.

Journal Reviewer, January 2021 to present. Serving as a reviewer for IEEE Access, IEEE Sensors Journal, and IEEE Transactions on Intelligent Transportation Systems.

Recognized Works

Led a comprehensive workshop on image processing, machine learning, and deep learning for researchers and scientists from the United States Department of Agriculture (USDA). The workshop took place from January 10 to 14, 2022. (Reference: ECE news posted on Feb. 03, 2022)

Conducted training on the Practical Atlas HPC application, focused on utilizing the Atlas GPU platform for deep learning applications, on June 08, 2022.

Recognized works at university student research: (a) Class-Aware Fish Species Recognition Using Deep Learning for an Imbalanced Dataset (b) WabileNet: Wavelet-Based Lightweight Feature Extraction Network

Professional Memberships